AI for Road Safety: Building Predictive, Hyperlocal Intelligence for Safer India

Roughly every three minutes, a life is lost on Indian roads. That statistic is not rhetorical. According to the Ministry of Road Transport and Highways (MoRTH), India recorded over 1.68 lakh road accident deaths in 2022, one of the highest road fatality figures globally. Behind each number is a family altered forever. Behind each crash lies a pattern, often predictable, sometimes preventable.

For decades, road safety interventions have been reactive. Accidents occur. Authorities respond. Blackspots are identified after repeated incidents. Enforcement increases only after fatalities spike. But in a country as vast and dynamic as India, reacting is no longer enough. The next chapter in road safety is being written through AI-driven, predictive, hyperlocal intelligence, a model that shifts the focus from post-incident analysis to real-time prevention.

The Scale of India’s Road Safety Challenge

India has one of the world’s largest and most diverse road networks. From congested urban intersections to high-speed national highways and rural roads lacking basic signage, conditions vary dramatically within short distances. According to MoRTH’s Road Accidents in India report, over-speeding accounts for the majority of fatal crashes, followed by dangerous driving behaviors and infrastructure-related risks. The World Health Organization also highlights that low- and middle-income countries bear a disproportionate share of global road traffic deaths, despite having fewer vehicles relative to population.

Traditional enforcement models (manual monitoring, periodic checks, and reactive policing) struggle to keep pace with this complexity. India needs solutions that operate continuously, scale efficiently, and adapt locally.

What “Predictive, Hyperlocal Intelligence” Really Means

Predictive road safety powered by AI goes beyond installing cameras at intersections.
It combines video analytics, traffic data, environmental inputs, and behavioral modeling to anticipate risk in real time.

Hyperlocal intelligence means analyzing road conditions at the micro level: specific intersections, pedestrian crossings, accident-prone stretches, school zones, or toll plazas. Instead of broad national averages, AI systems learn patterns unique to each location. For example, an urban junction may show repeated near-miss events between turning vehicles and pedestrians during peak evening hours. A highway segment may exhibit erratic lane switching before collisions. AI systems can detect these precursors, flag risk levels, and trigger preventive measures before a fatal crash occurs.

Research published in IEEE on intelligent transportation systems demonstrates that AI-based traffic analytics significantly improve early detection of hazardous behaviors compared to manual observation. The key shift is from counting accidents to predicting them.

Real-Time Violation Detection and Behavior Monitoring

AI-enabled road surveillance systems analyze live feeds to detect speeding, signal jumping, wrong-side driving, helmet violations, seatbelt non-compliance, and lane discipline issues. However, the real value lies not just in issuing challans, but in understanding behavior patterns. For instance, repeated red-light violations at a specific intersection may signal poor signal timing or visibility issues. High pedestrian conflict in a market area may indicate inadequate crossing infrastructure.

According to the World Economic Forum, smart mobility systems that integrate real-time monitoring with predictive analytics can significantly reduce traffic fatalities when combined with targeted interventions. AI transforms enforcement from punitive to preventive. It helps authorities intervene early, through signage changes, road redesign, enforcement presence, or public awareness campaigns.
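
To make the hyperlocal idea concrete, here is a minimal sketch of how near-miss events might be aggregated per location and hour to flag risk before a crash occurs. It is an illustration only: the `NearMiss` record, the location identifiers, the 0-1 severity score, and the threshold are all assumptions, not part of any system described in this article.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class NearMiss:
    location_id: str   # hypothetical identifier for a junction or road segment
    hour: int          # hour of day, 0-23
    severity: float    # hypothetical 0-1 score produced by the video analytics


def risk_by_location_hour(events):
    """Sum near-miss severity per (location, hour) bucket."""
    scores = Counter()
    for e in events:
        scores[(e.location_id, e.hour)] += e.severity
    return scores


def flag_hotspots(scores, threshold):
    """Return buckets whose summed severity meets the threshold, highest first."""
    hot = [(key, s) for key, s in scores.items() if s >= threshold]
    return sorted(hot, key=lambda item: item[1], reverse=True)


events = [
    NearMiss("junction_A", 18, 0.5),
    NearMiss("junction_A", 18, 0.75),
    NearMiss("junction_A", 9, 0.25),
    NearMiss("highway_km42", 23, 0.5),
]
hotspots = flag_hotspots(risk_by_location_hour(events), threshold=1.0)
print(hotspots)  # [(('junction_A', 18), 1.25)]
```

Here only the evening peak at one junction crosses the assumed threshold, which mirrors the example of repeated near-misses during peak evening hours. A real deployment would likely weight events by type and normalise by traffic volume.
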

Identifying and Managing Accident Blackspots Proactively

India has historically identified “blackspots” based on past accident data. While useful, this method inherently looks backward. Predictive AI models can instead analyze near-miss incidents, sudden braking patterns, crowding behaviors, and abnormal vehicle trajectories to identify high-risk zones before fatalities spike.

Studies in urban mobility research show that analyzing near-miss data provides earlier signals of systemic risk than waiting for confirmed accidents. This approach enables proactive infrastructure adjustments such as improved lighting, rumble strips, speed-calming measures, or lane redesign. In a country with high traffic density and mixed vehicle types, from two-wheelers to heavy trucks, such foresight can be life-saving.

Hyperlocal Insights for Smarter Urban Planning

AI-powered road surveillance does more than detect violations; it generates actionable insights for planners. Heatmaps of pedestrian flow help design safer crossings. Vehicle trajectory data informs signal timing optimization. Congestion patterns reveal the need for flyovers or service lanes. School zone monitoring supports safer dispersal strategies.

McKinsey’s research on smart cities emphasizes that data-driven mobility planning leads to measurable improvements in safety and efficiency. When traffic analytics feed into municipal dashboards, road safety becomes part of long-term urban intelligence. Hyperlocal analytics empower cities to move from broad policy to precise action.

Emergency Response and Faster Intervention

In road safety, response time matters. AI-enabled surveillance systems can automatically detect collisions, stalled vehicles, or sudden crowd formation after incidents. Immediate alerts to emergency services reduce delays in medical assistance. The World Bank notes that reducing post-crash response time significantly lowers mortality rates in road accidents.
Automated incident detection systems ensure that help is dispatched quickly, even if bystanders do not report the crash immediately. In high-speed corridors and remote areas, this capability can make the difference between life and death.

Ethical and Privacy Considerations

Deploying AI on public roads must be done responsibly. Road surveillance systems capture public movement, making transparency and governance essential. Best practices prioritize behavior-based detection over intrusive identity tracking. Data minimization, secure storage, defined retention periods, and strict access controls are fundamental safeguards.

Frameworks such as GDPR and UNESCO’s Recommendation on the Ethics of Artificial Intelligence emphasize proportionality, explainability, and accountability in AI systems. Ethical deployment ensures that road safety intelligence strengthens public trust rather than undermines it. Public communication about how data is used and how it protects citizens plays a critical role in acceptance.

The Role of IVIS in Building Safer Roads

To enable predictive, hyperlocal road intelligence, cities and state authorities require scalable and integrated platforms. This is where IVIS plays a meaningful role. IVIS supports AI-driven video analytics across distributed road networks, integrating feeds from intersections, highways, toll booths, and urban corridors into a centralized intelligence framework.

Through real-time violation detection, anomaly analysis, and risk scoring, IVIS enables authorities to move from reactive enforcement to predictive prevention. Its hybrid architecture allows edge-based analytics for low-latency decision-making while maintaining centralized dashboards for broader oversight. Configurable workflows ensure alerts reach traffic police, emergency responders, or municipal authorities instantly.
Importantly, IVIS incorporates policy-driven governance and secure data handling, aligning road safety initiatives with regulatory and ethical standards. In practice, IVIS helps transform road surveillance into a comprehensive road safety intelligence system.

Toward Vision

The Human Side of Intelligent Monitoring Systems

As AI reshapes public safety and urban governance, the conversation often revolves around models, cameras, and cloud infrastructure. Less glamorous, but far more consequential, is the human layer that sits between sensors and decisions: the surveillance operators, analysts, field responders, and policy-makers who must interpret AI outputs, act on them ethically, and keep the system resilient. This article looks at the practical skilling pathways demanded by real-world surveillance, and at how India’s summit-level momentum on skilling can be translated into safer streets and smarter command centres.

Why “human” still matters in AI-driven surveillance

AI-powered CCTV and video analytics can detect anomalies, classify behaviour, and surface high-priority events at scale. Yet these technologies are not a replacement for judgement; they are amplifiers. Real-world deployments show that false positives, contextual blind spots, and socio-cultural nuances require a human-in-the-loop approach where trained personnel validate alerts, calibrate models, and make ethically informed interventions. Research into worker-AI coexistence argues that AI typically augments rather than outright replaces human roles, provided organizations invest in complementary skills and learning systems.

For Indian cities moving toward the Viksit Bharat 2047 vision, where technology-led public goods become an engine of inclusive growth, this human-technology partnership is critical. National strategy documents and recent summit discussions emphasise that skilling must keep pace with AI adoption if the promise of safer, fairer public systems is to be realised.

The skills that matter for intelligent monitoring systems

Intelligent monitoring requires a blend of technical capability, domain knowledge, and human-centric competencies.
Training programs must therefore be multi-dimensional:

- Technical literacy for surveillance operators: understanding how video analytics work (object detection, tracking, anomaly scoring), interpreting confidence scores, and troubleshooting edge devices and connectivity issues. Practical labs with annotated footage and simulated incidents accelerate competence.
- Analyst and incident management skills: pattern recognition across time, incident triage, evidence preservation, and chain-of-custody basics. Analysts must be fluent with dashboarding tools and know how to translate model outputs into operational directives.
- Domain-specific judgement: public safety, crowd behaviour, road-traffic dynamics, and privacy-sensitive handling of footage. Contextual training (e.g., how behaviours differ in India’s urban and rural environments) reduces false alarms and community friction.
- Ethics, governance and legal literacy: human rights, data protection, bias mitigation, and community engagement protocols. Personnel must know when to escalate, when to de-escalate, and how to document decisions.
- Continuous learning and model stewardship: retraining pipelines, feedback loops that use operator-verified incidents to improve local models, and procedures to validate model drift over time.

These capabilities align with the recommendations emerging from policy-level roadmaps that call for accelerated curriculum updates, industry–academia collaboration, and certification pathways to produce “AI-ready” human capital.

From classroom to command centre: practical skilling pathways

An effective skilling ecosystem for surveillance should combine four delivery modalities:

- Bootcamps and short-term certification: intensive, hands-on courses for operators that focus on tools, alerts handling, and incident simulation.
- Industry-embedded apprenticeships: placing trainees in municipal command centres or private security ops under mentorship to gain real incident experience.
- Higher education integration: modular AI and ethics courses in polytechnics and universities to prepare future analysts and system architects.
- Micro-credentials and continuous upskilling: bite-sized online modules for working staff to learn about new analytics features, model updates, and legal guidelines.

India’s national initiatives and summit dialogues stress the urgency of such multi-pronged programs; public–private partnerships, including MoUs between industry, state governments, and academic institutions announced at the India AI Impact Summit, indicate momentum for scaling these pathways.

Real-world constraints: bias, trust and operational realities

Deployments often reveal three recurring constraints:

- Data bias and localisation gaps. Models trained on non-local datasets can misinterpret behaviour in Indian contexts. Culturally grounded datasets and hyperlocal model tuning are necessary to raise accuracy and public trust.
- Resource and connectivity limitations. Many municipal CCTV networks run on heterogeneous hardware and low-bandwidth links; skilling must include edge-first troubleshooting and graceful degradation strategies.
- Human factors under stress. Operators face alert fatigue, decision pressure during high-density events, and the moral burden of surveillance decisions. Training must therefore include stress resilience, scenario-based drills, and clear escalation protocols.

Addressing these requires not just technical training but organisational investments: rotational staffing, psychological support, and governance frameworks that protect both citizens and operators.

A playbook for industry–academia–government collaboration

To operationalise large-scale, ethical monitoring, stakeholders should coordinate on three fronts:

- Curriculum co-design. Academia drafts syllabi while industry provides datasets, labs, and internship slots. This ensures graduates are job-ready for command centre roles.
- Standardised certifications.
Government-endorsed certificates for surveillance operators and analysts create baseline trust and portability of skills across states and vendors.
- Model and data commons. Shared, privacy-preserving datasets for traffic, crowd behaviour, and incident types enable hyperlocal model tuning without monopolising training data.

These steps mirror the strategic recommendations that India’s policy documents and summit panels have stressed, creating systems that scale while retaining local relevance and human oversight.

Case study: skilling for road-safety monitoring

Consider a city deploying a road-safety monitoring pod: AI flags sudden braking patterns, jaywalking clusters, and overspeeding near schools. A well-trained operator distinguishes weather-related false positives from genuine hazards, activates targeted driver-awareness campaigns, and collaborates with traffic police for quick interventions. The results are measurable: reduced incident response times, hyperlocal policy tweaks (e.g., temporary speed limits), and data-driven evidence for infrastructure fixes.

This outcome is only possible when the operator has both technical familiarity with the analytics platform and contextual knowledge of local traffic behaviour, highlighting the labour-technology co-dependence.

IVIS + Scanalitix: bridging people and platform

At IVIS, we see the human side of intelligent monitoring as the competitive frontier. Technology alone cannot guarantee safer cities; it needs the scaffolding of skilling, policy, and collaborative deployment. That’s why our partnership model with Scanalitix focuses on three complementary pillars:

- Operational training integrated with the platform. Scanalitix’s analytics dashboards are paired with IVIS-run simulation labs where operators train on synthetic and anonymised local footage, practicing incident triage and escalation.
- Model stewardship and feedback loops.
Every verified alert becomes a labelled data point that feeds Scanalitix’s hyperlocal model updates, reducing false positives and improving cultural relevance over time.
- Governance and auditability. IVIS helps design SOPs, evidence-handling processes, and privacy-preserving workflows so city administrators can deploy with accountability and public trust.

By combining IVIS’s public-systems expertise with Scanalitix’s enterprise analytics, cities can not only deploy smart CCTV at scale but also incubate the human capital that makes those systems effective, ethical, and resilient.

Closing: invest in people if you want smarter cities

The India AI Impact Summit 2026 amplified a clear message: building an AI-led public infrastructure is as much a people

E-Surveillance for Reputation Protection – Preventing Incidents Before They Go Viral

A single incident. A 20-second video clip. A trending hashtag. In today’s hyperconnected world, that is often all it takes to trigger a brand crisis.

A guest argument in a hotel lobby. A safety lapse on a mall escalator. A delayed response to a medical emergency on a university campus. Within minutes, bystanders record the moment, upload it, and share it across platforms. Before management has time to assess the situation, the narrative is already forming online.

In the age of social media, physical incidents no longer remain local. They escalate into digital reputation events. This reality is why e-surveillance is evolving from a security function into a brand-risk management tool. Modern e-surveillance systems are not only designed to detect threats; they are increasingly deployed to prevent incidents from occurring in the first place, reducing the likelihood that they go viral.

The Social Media Multiplier Effect

The reputational stakes have never been higher. According to the World Economic Forum, reputational risk is now considered one of the top strategic risks facing organizations globally, amplified by real-time digital communication. A localized operational issue can rapidly transform into a global brand crisis.

Research from Deloitte on crisis management indicates that companies experiencing viral incidents often suffer prolonged reputational and financial impact, including decreased customer trust and long-term revenue decline. What makes these events particularly challenging is their speed. Social media compresses reaction time from days to minutes.

For hotels, malls, campuses, and public venues, spaces defined by high footfall and open access, the probability of incidents is naturally higher. The solution is not more reactive PR. It is earlier detection, smarter intervention, and operational foresight.

From Security to Brand Protection

Traditional surveillance focused on theft prevention and post-incident investigation. Cameras recorded footage for review after something went wrong. In a world without instant virality, that approach was often sufficient.

Today, the goal has shifted. Organizations now ask: Can we detect escalating conflicts before they turn into public confrontations? Can we manage crowd surges before panic spreads? Can we identify service bottlenecks before frustration spills into social media outrage?

Modern AI-powered video analytics make this possible by focusing on behavioural patterns rather than just rule violations. Surveillance becomes proactive: not merely protective, but preventive.

Predicting Flashpoints in Public-Facing Spaces

Public venues share a common characteristic: dynamic human behavior. Crowd density changes quickly. Emotions fluctuate. Minor friction can escalate.

Behavior-based analytics detect anomalies such as sudden crowd clustering, aggressive gestures, unusual loitering near sensitive areas, or erratic movement patterns. These signals often precede incidents. When flagged early, staff can intervene calmly and discreetly.

Research published in IEEE journals on anomaly detection in public surveillance systems demonstrates that context-aware AI significantly improves early identification of risk scenarios compared to manual observation. The result is faster response and reduced escalation. In hospitality and retail, this translates into smoother guest experiences. On campuses, it can mean preventing altercations or safety issues before they spiral.

Crowd Intelligence and Operational Foresight

Not all viral incidents stem from misconduct. Many arise from operational failures: long queues, overcrowded exits, delayed responses, or perceived negligence. Crowd analytics help management anticipate congestion points and manage flow proactively.
During peak check-in hours at hotels, sale events in malls, or major campus gatherings, predictive monitoring allows staff to deploy resources strategically.

According to McKinsey’s research on operations optimization, data-driven management significantly improves service efficiency and reduces friction in customer-facing environments. When surveillance data feeds operational dashboards, it becomes a tool for experience management, not just security. By reducing frustration and confusion, organizations reduce the likelihood of moments that attract negative attention.

Rapid Response: Containing the Narrative

Even when incidents occur, speed and clarity are critical. Surveillance systems provide immediate situational awareness, enabling leadership to understand what happened in real time. Objective video evidence supports accurate communication with stakeholders, law enforcement, and the public. Instead of reacting to speculation, organizations can respond with verified information.

PwC’s crisis management insights emphasize that organizations that respond quickly and transparently recover reputation faster than those that delay or rely on incomplete information. Surveillance-backed clarity becomes a strategic asset.

Ethical Deployment: Trust as the Foundation

Using surveillance as a brand-protection tool must be handled responsibly. Public-facing spaces depend on trust. Guests, shoppers, students, and visitors must feel protected, not watched or profiled.

Best practices prioritize behaviour-based analytics rather than identity recognition. Private areas remain excluded. Clear signage, data governance policies, and defined retention periods ensure transparency. Frameworks such as GDPR and UNESCO’s Recommendation on the Ethics of Artificial Intelligence emphasize proportionality and accountability in AI-driven monitoring. Ethical deployment strengthens reputation rather than undermining it.
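
A “defined retention period” can be made concrete with a small sketch. The one below assumes a hypothetical `Clip` record and an `incident_hold` flag for footage tied to an open investigation; neither the field names nor the 30-day window come from any specific platform or regulation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Clip:
    clip_id: str
    recorded_at: datetime
    incident_hold: bool = False  # hypothetical flag: kept for an open investigation


def clips_to_purge(clips, now, retention_days):
    """Return clips older than the retention window that are not on hold."""
    cutoff = now - timedelta(days=retention_days)
    return [c for c in clips if c.recorded_at < cutoff and not c.incident_hold]


now = datetime(2026, 3, 1)
clips = [
    Clip("lobby-001", datetime(2026, 1, 1)),
    Clip("lobby-002", datetime(2026, 2, 20)),
    Clip("exit-007", datetime(2026, 1, 5), incident_hold=True),
]
expired = clips_to_purge(clips, now, retention_days=30)
print([c.clip_id for c in expired])  # ['lobby-001']
```

Keeping the rule this explicit makes it easy to publish and audit: footage is deleted once it ages out unless a documented hold applies.
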

Surveillance as a Strategic Risk Tool

Forward-looking organizations increasingly treat surveillance data as part of enterprise risk management. It complements cybersecurity monitoring, operational analytics, and reputation tracking.

In hotels, predictive e-surveillance identifies guest dissatisfaction hotspots. In malls, it monitors safety compliance and crowd dynamics. On campuses, it supports incident prevention and emergency readiness. By integrating video intelligence into executive dashboards, organizations shift from reactive damage control to preventive brand stewardship.

The Role of IVIS in Reputation-Focused Surveillance

To protect reputation effectively, organizations require unified visibility across locations and systems. This is where IVIS plays a strategic role. IVIS, in collaboration with Scanalitix, enables centralized monitoring across distributed properties like hotels, malls, campuses, and public venues, transforming surveillance data into actionable insights.

Through AI-driven video analytics and contextual risk scoring, IVIS supports early detection of anomalies that may signal escalating incidents. Its hybrid architecture, combining edge processing with centralized oversight, ensures rapid response without compromising governance. Configurable workflows allow alerts to escalate to appropriate teams quickly, enabling discreet and timely intervention.

Importantly, IVIS incorporates policy-driven controls, audit trails, and secure access management. This alignment of intelligence with governance ensures that reputation protection remains ethical and compliant. In practice, IVIS helps organizations move beyond security toward proactive reputation resilience, preventing moments that could otherwise dominate headlines.

Looking Ahead: The Future of Brand-Integrated E-Surveillance

As social media ecosystems evolve, so will public scrutiny.
The next phase of surveillance innovation will integrate predictive analytics with operational data, event schedules, and sentiment analysis tools. Digital twins of public spaces may simulate crowd scenarios in advance. AI models will refine escalation detection with greater contextual awareness. Edge computing will enable instant local action. At the same time, regulatory oversight will intensify.

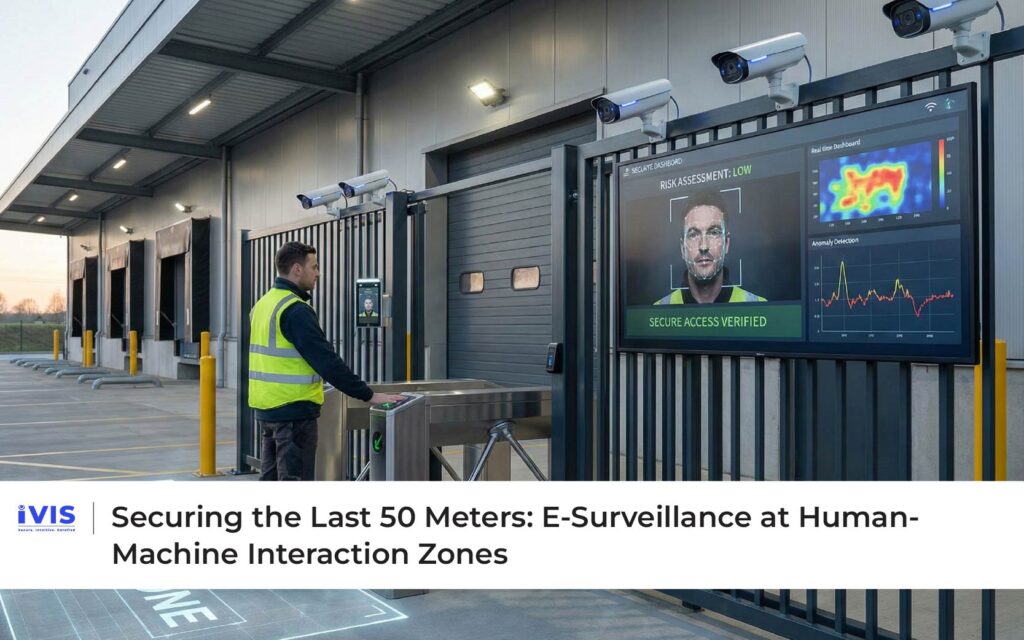

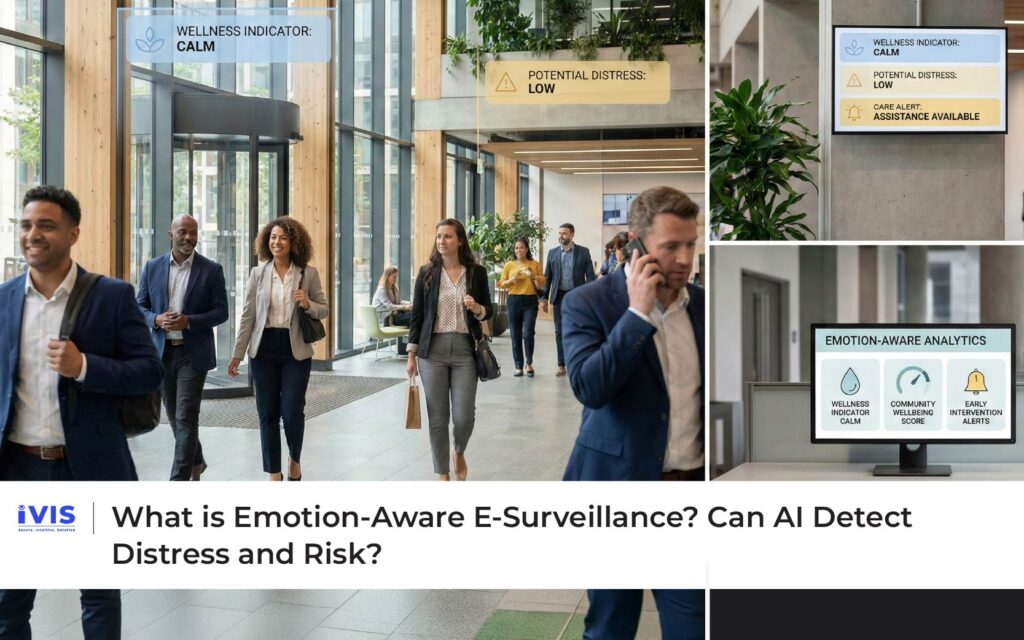

What is Emotion-Aware E-Surveillance? Can AI Detect Distress and Risk?

In a busy hospital corridor, a patient moves more slowly than usual, pausing frequently and leaning against the wall. In a metro station, a commuter’s speed changes abruptly, signaling fatigue or distress. On a university campus, a student lingers in an isolated area far longer than normal. None of these moments are crimes. Yet each may signal risk (medical, emotional, or safety-related) where timely support can make a critical difference.

This is the emerging promise of emotion-aware surveillance. Unlike traditional monitoring that looks for rule violations, emotion-aware systems aim to detect signals of distress and risk through behavior, posture, movement patterns, and context. In 2026, this capability sits at the center of an important debate: how can AI help protect people without crossing ethical lines?

Why Detecting Distress Matters in Modern Spaces

Public and semi-public environments have grown more complex. Hospitals operate under constant pressure. Transport hubs like metro stations and bus stops manage massive daily footfall. Educational campuses and workplaces bring together diverse populations with varying vulnerabilities. In these settings, risk often appears before an incident through subtle behavioural hints rather than obvious alarms.

Research in public safety and healthcare consistently shows that early intervention reduces harm. The World Health Organization has emphasized the importance of early detection in preventing escalation of medical and mental health crises. However, relying solely on human observation is challenging at scale. Staff cannot watch everywhere at once, and signs of distress are often easy to miss.

Emotion-aware surveillance addresses this gap by augmenting human awareness, not replacing it, surfacing early indicators so that people can respond with care.

What Emotion-Aware Surveillance Really Is

Emotion-aware surveillance is frequently misunderstood.
It is not about reading minds, labeling emotions, or assigning intent. Ethical implementations avoid speculative emotion classification from facial expressions alone, a practice widely criticized for inaccuracy and bias.

Instead, modern systems focus on observable, context-driven behaviors: changes in gait, posture, dwell time, erratic movement, crowd interaction, or deviations from an individual’s normal pattern within a given environment. These signals are then interpreted probabilistically, with thresholds designed to flag potential risk, not definitive conclusions.

Peer-reviewed research published in IEEE journals highlights that behavior-based analytics are more reliable and less intrusive than facial emotion recognition, especially in real-world settings. This distinction is critical to ethical deployment.

From Reaction to Prevention: How the Technology Works

Emotion-aware surveillance builds on three core capabilities. First, AI models learn what “normal” looks like in a specific environment: how people typically move, interact, and occupy space at different times. Second, they detect deviations that may indicate distress or vulnerability. Third, they contextualize these signals using location, time, and surrounding activity to assess risk.

For example, a prolonged halt in a hospital hallway may signal fatigue or medical distress, while similar behavior in a shopping mall may be inconsequential. Context prevents overreaction and reduces false alarms.

Crucially, these systems do not act in isolation. Alerts are designed to prompt human review and compassionate intervention, such as a staff check-in or medical assessment.

Use Cases Across Sectors

Emotion-aware surveillance has practical applications across multiple sectors. In healthcare, it supports fall-risk detection, patient deterioration alerts, and staff safety monitoring.
Studies indicate that posture and walking speed analysis can significantly improve early detection of falls and medical emergencies in clinical environments.

In transport and public infrastructure, these systems help identify individuals in distress, manage crowd anxiety during disruptions, and enable faster assistance. Transport authorities globally are exploring behavior-based analytics to improve passenger safety without intrusive monitoring.

In education, particularly universities and large campuses, emotion-aware surveillance can flag unusual isolation or distress patterns, allowing support teams to intervene early. Importantly, this is about safeguarding well-being, not discipline or profiling.

Ethics at the Core: Why Guardrails Matter

The ethical risks of emotion-aware surveillance are real. Misinterpretation, bias, and overreach can erode trust quickly. That is why governance and design choices matter as much as algorithms.

Leading global frameworks emphasize caution. UNESCO’s Recommendation on the Ethics of Artificial Intelligence explicitly warns against speculative emotion inference and stresses proportionality, transparency, and human oversight. Systems must be explainable, limited in scope, and aligned with human rights.

Best-practice deployments therefore adopt several principles: behavior-based analytics over identity tracking; no use in private spaces; clear escalation paths to humans; and strong auditability. These guardrails ensure that technology supports care, not control.

Privacy-First Design and Trust

Trust is foundational. Emotion-aware surveillance must be privacy-first by design. That means minimizing data collection, avoiding identity recognition unless legally justified, encrypting data, restricting access, and defining strict retention policies. Regulatory regimes such as GDPR reinforce these requirements, particularly in sensitive environments like healthcare and education.
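
The baseline, deviation, and context steps described under “How the Technology Works” can be sketched in a way that respects the data-minimisation points above. This is a simplified illustration, not a production method: it uses only anonymous dwell times per zone, and the zone names, thresholds, and sample values are assumptions.

```python
from statistics import mean, stdev


def dwell_zscore(dwell_seconds, baseline):
    """How many standard deviations a dwell time sits above the zone's baseline."""
    mu, sigma = mean(baseline), stdev(baseline)
    return (dwell_seconds - mu) / sigma if sigma > 0 else 0.0


# Hypothetical per-zone thresholds: context decides how unusual is worth a check-in.
ZONE_THRESHOLDS = {"hospital_corridor": 2.0, "mall_atrium": 4.0}


def needs_human_review(zone, dwell_seconds, baseline):
    """Flag for staff review; nothing here labels an emotion or an identity."""
    return dwell_zscore(dwell_seconds, baseline) >= ZONE_THRESHOLDS[zone]


corridor_baseline = [20, 25, 30, 22, 28]    # anonymous dwell times in seconds
atrium_baseline = [60, 300, 120, 600, 240]

corridor_flag = needs_human_review("hospital_corridor", 90, corridor_baseline)
atrium_flag = needs_human_review("mall_atrium", 90, atrium_baseline)
print(corridor_flag, atrium_flag)  # True False
```

The same 90-second pause is flagged in the corridor and ignored in the atrium, and the output is only a prompt for a person to check in, never a conclusion.
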
Transparency, through clear signage, published policies, and staff training, helps people understand why monitoring exists and how it protects them. Research by the World Economic Forum underscores that public acceptance of AI in shared spaces increases when systems are transparent, purpose-limited, and demonstrably beneficial.

Human-in-the-Loop: Keeping Care Central

Emotion-aware surveillance should never operate as an autonomous judge. Ethical models are human-in-the-loop or human-on-the-loop by design. AI surfaces signals; people decide responses.

This approach improves outcomes and reduces risk. Trained staff can interpret context, provide assistance, and de-escalate situations with empathy. The technology’s role is to ensure that no one falls through the cracks when attention is stretched thin.

The Role of IVIS in Responsible Emotion-Aware Surveillance

Deploying emotion-aware surveillance responsibly requires platforms that combine intelligence with governance. This is where IVIS plays a meaningful role. IVIS, in collaboration with Scanalitix, enables organizations to apply behaviour-based video analytics within clearly defined policies, ensuring alerts focus on risk indicators rather than identity profiling.

Its architecture supports edge processing, reducing latency and data exposure, while maintaining centralized oversight for consistency and compliance. Configurable workflows ensure that alerts escalate to humans for review and compassionate response.

By embedding audit trails, access controls, and transparent reporting, IVIS aligns advanced analytics with ethical standards and regulatory expectations. In practice, IVIS helps organizations move toward care-centric surveillance, using intelligence to protect people while preserving trust.

What the Future Holds

Looking ahead, emotion-aware surveillance will become more contextual, restrained, and accountable.
Advances in multimodal AI combining video with environmental and operational data, will improve accuracy without increasing intrusiveness. Federated learning and edge AI will further reduce privacy risks. At the same time, scrutiny will intensify. Regulators, institutions, and communities will demand proof that these systems help rather than harm. Success will belong to solutions that pair technical capability with ethical discipline. Conclusion Emotion-aware surveillance represents a shift in how we think about safety from enforcement to empathy, from reaction to

The Rise of Video Intelligence in Compliance Auditing

The Rise of Video Intelligence in Compliance Auditing Compliance has always been a cornerstone of regulated industries. Whether in banking, healthcare, manufacturing, energy, or public infrastructure, organizations are expected to prove that processes are followed, risks are controlled, and people are protected. Traditionally, compliance auditing relied heavily on documentation, manual inspections, and periodic reporting. While these methods served their purpose, they were often time-consuming, reactive, and limited in scope. Today, that model is changing. Video intelligence, the combination of video surveillance with AI-powered analytics, is reshaping how compliance is monitored, validated, and enforced. Instead of relying solely on paper trails and after-the-fact checks, organizations are using visual data to gain real-time, objective evidence of compliance. Auditing is no longer just about reviewing the past; it is increasingly about continuous assurance. Why Traditional Compliance Audits Are Under Pressure Modern compliance requirements are growing in both complexity and frequency. Regulators expect higher transparency, faster reporting, and stronger proof that controls are effective. At the same time, organizations operate at greater scale, across distributed locations and hybrid environments. Manual audits struggle to keep up with this pace. Site visits are expensive and infrequent. Self-reported checklists are prone to error or bias. Gaps between audits allow non-compliance to go unnoticed until it becomes a serious issue. According to Deloitte, organizations that rely solely on periodic audits face higher operational and regulatory risk because violations often occur between reporting cycles. This has driven a shift toward continuous monitoring models, where compliance is validated as operations happen, not weeks or months later. 
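To make the idea of continuous monitoring concrete, here is a minimal sketch of how a stream of time-stamped violation events could be scanned for repeats that a periodic audit might miss. The event shape (timestamp, zone, rule), the function name, and the seven-day window and three-event minimum are all illustrative assumptions, not part of any specific product.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def recurring_violations(events, window=timedelta(days=7), min_count=3):
    """Return sorted (zone, rule) pairs that occur at least min_count
    times within any sliding window of the given length.

    events: iterable of (timestamp, zone, rule) tuples (hypothetical shape).
    """
    by_key = defaultdict(list)
    for ts, zone, rule in events:
        by_key[(zone, rule)].append(ts)

    flagged = []
    for key, stamps in by_key.items():
        stamps.sort()
        start = 0
        for end in range(len(stamps)):
            # Shrink the window from the left until it spans <= `window`.
            while stamps[end] - stamps[start] > window:
                start += 1
            if end - start + 1 >= min_count:
                flagged.append(key)
                break
    return sorted(flagged)


if __name__ == "__main__":
    sample = [
        (datetime(2024, 1, 1, 9), "dock", "no_helmet"),
        (datetime(2024, 1, 3, 9), "dock", "no_helmet"),
        (datetime(2024, 1, 6, 9), "dock", "no_helmet"),
        (datetime(2024, 1, 1, 9), "lab", "no_gloves"),
    ]
    print(recurring_violations(sample))
```

A sliding window is used rather than fixed calendar buckets so that a cluster of events straddling a week boundary is still counted together.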
What Video Intelligence Brings to Compliance Auditing Video intelligence transforms surveillance footage into structured, searchable, and actionable data. Instead of passively recording video, AI models analyze scenes to detect behaviors, conditions, and deviations from defined standards. For example, in an industrial facility, video analytics can verify whether workers are wearing required personal protective equipment, whether restricted zones are respected, or whether safety procedures are followed consistently. In BFSI environments, video intelligence can validate access control compliance, monitor sensitive areas, and ensure procedural adherence during cash handling. Research published in IEEE Access shows that intelligent video analytics significantly improve accuracy in detecting rule violations compared to manual observation, while also reducing human bias. Video becomes not just evidence, but insight. From Periodic Audits to Continuous Compliance One of the most significant impacts of video intelligence is the move from periodic audits to continuous compliance auditing. Instead of sampling behavior at fixed intervals, organizations gain ongoing visibility into operations. This approach allows compliance teams to identify trends, not just isolated incidents. Repeated minor violations, which might go unnoticed in traditional audits, become visible through pattern analysis. Early intervention becomes possible, reducing the likelihood of major breaches or penalties. The World Economic Forum highlights continuous monitoring as a key pillar of modern governance, especially in highly regulated and safety-critical sectors. Video intelligence supports this model by providing an always-on validation layer. Objective Evidence and Audit Readiness Compliance audits often hinge on evidence. Video intelligence provides objective, time-stamped records that are difficult to dispute. 
Unlike written reports or verbal confirmations, visual data offers direct proof of what occurred. This is particularly valuable during regulatory inspections or internal investigations. Auditors can review specific events, verify corrective actions, and assess whether controls are effective in practice. Video analytics can also generate automated compliance reports, reducing manual effort and speeding up audit cycles. According to PwC, organizations that leverage digital evidence and automation in compliance processes report faster audits and lower operational disruption. Video intelligence strengthens audit readiness by making evidence accessible and reliable. Balancing Compliance with Privacy and Ethics While video intelligence offers powerful compliance benefits, it must be deployed responsibly. Compliance monitoring should not come at the expense of privacy, dignity, or trust. Modern systems increasingly rely on behaviour-based analytics rather than identity recognition. The focus is on what is happening, not who is involved, unless identification is legally required. Private areas are excluded, access to footage is controlled, and retention periods are clearly defined. Regulations such as GDPR and emerging data protection laws emphasize proportionality, transparency, and accountability in monitoring systems. UNESCO’s Recommendation on the Ethics of Artificial Intelligence reinforces that AI-driven oversight must respect human rights and be explainable. Ethical deployment is essential to ensuring that video intelligence strengthens governance rather than undermines it. Cross-Industry Applications of Video-Driven Compliance The rise of video intelligence in compliance auditing spans multiple sectors. In manufacturing, it supports safety compliance, quality control, and process adherence. In energy and utilities, it helps validate perimeter security, operational protocols, and contractor safety. 
In healthcare, it supports hygiene standards, access control, and patient safety processes. In BFSI, it strengthens oversight of sensitive operations and physical security controls. Across these sectors, the common thread is the need for verifiable, real-time compliance assurance, something traditional audits alone cannot provide. The Role of IVIS in Video-Driven Compliance Auditing As compliance requirements evolve, organizations need platforms that can unify video intelligence with governance and reporting. This is where IVIS plays a meaningful role. IVIS enables organizations to centralize video analytics across locations and environments, transforming surveillance data into actionable compliance insights. By applying AI-driven analytics to live and recorded video, IVIS supports continuous monitoring of policies, procedures, and safety standards. Alerts, logs, and audit trails help compliance teams identify gaps early and document corrective actions clearly. Designed for hybrid deployments across edge, on-prem, and cloud, IVIS ensures that compliance monitoring remains scalable and secure. Policy-driven access controls and configurable retention support regulatory alignment, while centralized dashboards simplify audit preparation. In practice, IVIS helps organizations move from reactive audits to proactive compliance intelligence. What the Future Holds for Compliance Auditing The future of compliance auditing will be increasingly automated, data-driven, and predictive. Video intelligence will integrate with other enterprise systems, such as access control, IoT sensors, and risk management platforms, to provide a holistic view of compliance posture. AI models will not only detect violations, but also predict areas of elevated risk based on historical trends and contextual factors. This will allow organizations to allocate resources more effectively and prevent non-compliance before it occurs. 
As regulators continue to demand transparency and accountability, video intelligence will become a standard component of compliance frameworks, not an optional enhancement. Conclusion Compliance auditing is evolving from a retrospective exercise into a continuous, intelligence-led discipline. Video intelligence sits at the heart of this transformation, providing real-time visibility, objective evidence, and actionable insight. When

The Evolution from Monitoring Rooms to Decision Engines

The Evolution from Monitoring Rooms to Decision Engines Not long ago, surveillance command centers were quiet, dimly lit rooms filled with video walls and rows of operators watching dozens, sometimes hundreds of camera feeds. Their job was simple in theory but exhausting in practice: observe, wait, and respond when something went wrong. These monitoring rooms were the nerve centers of security operations, yet they were largely reactive, dependent on human attention and hindsight. Today, that model is undergoing a fundamental transformation. Modern command centers are no longer just places where footage is watched. They are becoming decision engines, intelligent hubs that analyze data, predict outcomes, and orchestrate responses across people, systems, and locations. This evolution reflects a broader shift in surveillance itself: from passive monitoring to active, intelligence-driven decision-making. Why Traditional Monitoring Rooms Reached Their Limits The limitations of traditional monitoring rooms were not due to lack of effort, but to human constraints. Operators are expected to monitor multiple screens for long periods, identify anomalies, and make quick judgments under pressure. Research has consistently shown that sustained attention degrades rapidly in such settings, increasing the risk of missed events. At the same time, the scale of surveillance has expanded dramatically. Cities deploy thousands of cameras. Enterprises manage distributed facilities across regions. Public infrastructure, transport hubs, and campuses generate vast volumes of real-time data. Monitoring rooms designed for a simpler era struggle to keep pace with this complexity. According to studies cited by the World Economic Forum, the gap between data generation and human decision capacity is one of the biggest challenges in modern security operations. This gap is what set the stage for the rise of analytics-driven command centers. 
The Shift Toward Intelligence-Led Operations The first step in this evolution was the introduction of video analytics. Instead of relying solely on human eyes, systems began to detect motion, count people, and flag predefined events. While helpful, early analytics were still limited: they reacted to individual triggers rather than understanding context. The real transformation began when analytics matured into contextual intelligence. AI models started correlating data across cameras, timeframes, and sources. They learned patterns of normal behavior and identified anomalies that suggested emerging risks. Command centers could now prioritize alerts, reduce false positives, and focus attention where it mattered most. Research published in IEEE journals highlights that context-aware analytics significantly improve detection accuracy and response efficiency. This marked the transition from monitoring rooms to environments that assist decision-making, rather than simply presenting information. From Screens to Situational Awareness Modern decision engines are built around situational awareness, not screen density. Instead of overwhelming operators with raw feeds, they synthesize information into actionable insights. Dashboards present what is happening, why it matters, and what actions are recommended. For example, a command center overseeing a large campus might receive a single prioritized alert indicating abnormal crowd behavior near an exit, supported by video snippets, historical context, and suggested responses. This is a far cry from manually scanning dozens of feeds hoping to notice something unusual. According to McKinsey, organizations that move from data monitoring to decision support see faster response times and more consistent outcomes. In surveillance, this means fewer missed incidents and better coordination during critical moments. Decision Engines and Predictive Capability The evolution does not stop at real-time awareness. 
The most advanced command centers now incorporate predictive analytics. By analyzing historical data alongside live inputs, decision engines can forecast risks before they materialize. In transport systems, this may involve predicting congestion or safety incidents based on time, events, and past patterns. In industrial environments, it may mean anticipating unsafe interactions between humans and machines. In public spaces, it can involve forecasting crowd surges or emergency response needs. The World Bank notes that predictive, data-driven decision systems improve resilience by enabling proactive interventions rather than reactive fixes. Command centers that adopt this approach move from managing incidents to preventing them. Integration: The Heart of a True Decision Engine What truly distinguishes a decision engine from a monitoring room is integration. Modern command centers do not operate in isolation. They ingest data from video systems, access controls, IoT sensors, alarms, and operational platforms. By correlating these inputs, decision engines provide a holistic view of the environment. A door access alert gains meaning when paired with video analytics. A sensor anomaly becomes actionable when visual confirmation is available. This integration reduces ambiguity and speeds decision-making. Industry frameworks for smart cities and critical infrastructure consistently emphasize integration as a cornerstone of effective command centers. Without it, even the most advanced analytics remain siloed. Human Roles in the Age of Decision Engines As command centers evolve, the role of humans evolves with them. Operators are no longer passive watchers. They become decision supervisors, validating insights, managing exceptions, and coordinating responses. This shift reduces fatigue and improves job satisfaction. Instead of staring at screens, teams focus on judgment, communication, and continuous improvement. 
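The integration point made above, that a door access alert gains meaning when paired with video analytics, can be sketched as a simple time-and-location join. This is only an illustration: the `Event` fields, the `correlate` function, and the ten-second gap are hypothetical choices, and a real deployment would depend on its own event schema and clock synchronization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    source: str       # e.g. "access_control" or "video" (assumed labels)
    location: str     # shared location identifier across systems
    kind: str         # e.g. "door_forced", "person_detected"
    timestamp: float  # seconds since epoch


def correlate(access_events, video_events, max_gap_s=10.0):
    """Pair each access event with video events at the same location
    whose timestamps fall within max_gap_s seconds of it."""
    pairs = []
    for a in access_events:
        matches = [
            v for v in video_events
            if v.location == a.location
            and abs(v.timestamp - a.timestamp) <= max_gap_s
        ]
        pairs.append((a, matches))
    return pairs


if __name__ == "__main__":
    door = Event("access_control", "server_room", "door_forced", 100.0)
    seen = Event("video", "server_room", "person_detected", 104.0)
    for access, matches in correlate([door], [seen]):
        print(access.kind, "->", [m.kind for m in matches])
```

An access event with no matching video event is still returned, with an empty match list, since the absence of visual confirmation is itself useful to an operator.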
Importantly, humans remain accountable for high-impact decisions, ensuring ethical and legal oversight. Standards bodies such as NIST stress the importance of human-in-the-loop or human-on-the-loop models for AI-driven systems. Decision engines enhance human capability; they do not replace it. The Role of IVIS in the Evolution to Decision Engines As organizations transition from monitoring rooms to decision engines, they need platforms designed for orchestration, intelligence, and governance. This is where IVIS plays a meaningful role. IVIS enables command centers to move beyond passive monitoring by unifying video analytics, contextual data, and operational workflows into a single intelligent platform. Instead of presenting raw feeds, IVIS supports prioritized alerts, real-time insights, and coordinated response mechanisms. Its architecture allows data to be processed at the edge for speed, while maintaining centralized oversight for strategy and compliance. By embedding policy-driven controls, audit trails, and scalable integration, IVIS ensures that decision engines remain transparent, secure, and aligned with regulatory requirements. In practice, IVIS helps organizations transform their command centers into operational brains, places where information becomes insight, and insight becomes action. Ethics, Governance, and Trust As decision engines gain autonomy and influence, governance becomes critical. Clear rules must define what systems can decide automatically and what requires human approval. Transparency and explainability are essential to maintaining trust among stakeholders. Global frameworks on AI ethics emphasize accountability, proportionality, and oversight. Command centers that adopt these principles ensure that intelligence serves safety and efficiency without crossing ethical boundaries. Trust is not

The Rise of Autonomous E-Surveillance: When Systems Decide Before Humans Do?

The Rise of Autonomous E-Surveillance: When Systems Decide Before Humans Do? For decades, e-surveillance has followed a familiar rhythm. Cameras observed in the background, and humans interpreted. Decisions came later. But today, that rhythm is breaking. In airports, factories, campuses, and cities, e-surveillance systems are no longer waiting for human input. They are detecting, assessing, and acting, sometimes within milliseconds. This shift marks the rise of autonomous e-surveillance. Powered by AI, edge computing, and predictive analytics, these systems don’t just flag events; they decide what matters and trigger responses automatically. It’s a powerful evolution, one that promises speed, scale, and consistency. It also raises important questions about control, accountability, and trust. Why E-Surveillance Is Moving Toward Autonomy The primary driver of autonomy is scale. Modern environments generate far more video and sensor data than humans can process in real time. Large facilities can deploy thousands of cameras; cities deploy tens of thousands. Even the most attentive operators face fatigue and cognitive overload. Research consistently shows that human attention degrades quickly when monitoring multiple video feeds for extended periods. At the same time, threats have become faster and more complex, ranging from coordinated intrusions to safety incidents that escalate in seconds. Waiting for manual review can mean missed opportunities to prevent harm. Autonomous e-surveillance addresses this gap by enabling systems to analyze continuously and act immediately. Decisions that once took minutes, or never happened, now occur in real time. What “Autonomous” Really Means in E-Surveillance Autonomous surveillance does not imply machines acting blindly. It refers to systems that can detect, evaluate, and initiate predefined actions without waiting for human approval, within carefully defined boundaries. 
These systems combine computer vision, machine learning, and rule-based orchestration. They learn what “normal” looks like in a given environment, identify deviations, assess risk, and execute responses. Responses may include sending alerts, locking doors, activating alarms, adjusting camera focus, or notifying emergency teams. Importantly, autonomy exists on a spectrum. In many deployments, systems act autonomously for low-risk or time-critical events while escalating complex or high-impact decisions to humans. This hybrid model preserves oversight while capturing the benefits of speed. From Detection to Decision in Real Time Traditional analytics detect events: motion, entry, or thresholds. Autonomous surveillance goes further by interpreting context. It correlates behavior over time, across cameras and sensors, to infer intent or risk. For example, a single person standing near a restricted area may not trigger action. But repeated loitering, combined with time-of-day patterns and failed access attempts, may cross a risk threshold. An autonomous system can decide to escalate immediately, rather than waiting for an operator to connect the dots. Studies published in IEEE journals show that multi-sensor, context-aware analytics significantly outperform single-event detection in identifying genuine risks while reducing false positives. Autonomy depends on this contextual intelligence to make reliable decisions. Edge Computing: The Enabler of Autonomous Action Autonomy requires speed. Sending every frame to a centralized cloud introduces latency and dependency on connectivity. Edge computing solves this by processing data close to the source, inside cameras or local gateways. Edge-based autonomy enables instant decisions even in remote or bandwidth-constrained locations. If a perimeter breach occurs at a substation or an after-hours intrusion is detected at a warehouse, the system can act locally within milliseconds. 
Industry analyses note that edge analytics are essential for time-critical AI workloads. In surveillance, autonomy without edge processing is often impractical. Operational Benefits Across Sectors Autonomous e-surveillance is already reshaping operations across industries. In transport hubs, systems manage crowd flow, trigger alerts for unattended objects, and coordinate responses without waiting for manual confirmation. In manufacturing, autonomous surveillance can stop machinery or restrict access when unsafe conditions are detected. In education and healthcare, it can initiate emergency protocols during incidents where seconds matter. The World Economic Forum highlights that autonomy in monitoring systems improves resilience by reducing response times and standardizing actions during high-stress events. The benefit is not just speed, but consistency: actions are executed exactly as designed, every time. Human Oversight Still Matters Autonomy does not eliminate the human role; it redefines it. Humans move from constant monitoring to strategic oversight. They design rules, validate outcomes, review escalations, and handle exceptions. This shift reduces fatigue and improves decision quality. Instead of watching screens, teams focus on judgment, coordination, and improvement. When autonomy is implemented responsibly, it augments human capability rather than replacing it. Standards bodies emphasize the importance of human-in-the-loop or human-on-the-loop models, particularly for decisions with legal, ethical, or safety implications. Autonomy should accelerate action, not bypass accountability. Ethics, Governance, and Trust As systems decide more, governance becomes critical. Autonomous surveillance must operate within clear ethical and regulatory frameworks. Transparency, proportionality, and auditability are essential to maintain trust. Autonomous actions should be explainable: organizations must understand why a system acted and be able to review outcomes. 
Policies should define which decisions can be automated and which require human approval. Data minimization and privacy-preserving analytics help ensure that autonomy does not become overreach. International guidance on AI ethics consistently stresses that autonomy must be bounded by human values and oversight. Trust in autonomous surveillance depends on disciplined design and governance as much as technical performance. The Role of IVIS in Autonomous E-Surveillance As organizations adopt autonomy, they need platforms that can orchestrate decisions responsibly across devices, sites, and systems. This is where IVIS plays a meaningful role. IVIS enables autonomous e-surveillance by unifying real-time video analytics, contextual data, and rule-based orchestration within a single operational platform. It supports edge-based decision-making for time-critical events while maintaining centralized visibility and control. Policies define what actions the system can take autonomously and when escalation is required. By combining autonomy with governance (secure access, audit trails, and configurable workflows), IVIS helps organizations move toward faster, more reliable responses without sacrificing accountability. In practice, IVIS supports a measured transition from human-driven monitoring to autonomous decision support. What Comes Next The trajectory is clear. Surveillance systems will continue to gain autonomy as AI models improve and integration deepens. Future platforms will simulate scenarios, recommend actions, and coordinate responses across teams and systems. At the same time, scrutiny will increase. Regulators, employees, and the public will demand assurance that autonomous decisions are fair, explainable, and reversible. Success will belong to systems that combine speed with restraint, and automation with oversight. Conclusion Autonomous e-surveillance represents a fundamental shift, from watching to deciding, from reacting to anticipating. When designed
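The loitering example earlier in this section, where several weak signals together cross a risk threshold, can be sketched as a weighted score. The weights, the threshold, and the field names below are invented purely for illustration; a real system would tune or learn them per site and would likely use far richer context.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    loiter_events: int           # separate loitering detections in the look-back period
    failed_access_attempts: int  # denied badge or PIN attempts in the same period
    after_hours: bool            # whether the activity falls outside normal hours


# Hypothetical weights and threshold, chosen only to illustrate the idea.
WEIGHTS = {"loiter": 1.0, "failed_access": 2.0, "after_hours": 1.5}
ESCALATE_AT = 5.0


def risk_score(obs: Observation) -> float:
    """Combine individual signals into one additive risk score."""
    score = WEIGHTS["loiter"] * obs.loiter_events
    score += WEIGHTS["failed_access"] * obs.failed_access_attempts
    if obs.after_hours:
        score += WEIGHTS["after_hours"]
    return score


def decide(obs: Observation) -> str:
    """Return "escalate" once the combined score reaches the threshold."""
    return "escalate" if risk_score(obs) >= ESCALATE_AT else "observe"


if __name__ == "__main__":
    print(decide(Observation(1, 0, False)))
    print(decide(Observation(2, 1, True)))
```

A single daytime loitering event stays below the threshold, while repeated loitering plus a failed access attempt after hours does not, which mirrors the scenario described in the text.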

Cyber-Resilient E-Surveillance Systems: Securing the Security Infrastructure Itself

Cyber-Resilient E-Surveillance Systems: Securing the Security Infrastructure Itself E-surveillance systems are designed to protect organizations, people, and critical assets. Yet in an increasingly connected world, these systems themselves have become attractive targets. Cameras, recorders, analytics engines, and command platforms now sit on IP networks, integrate with cloud services, and exchange data with access control, IoT sensors, and enterprise systems. When the security infrastructure is compromised, it doesn’t just fail; it becomes a liability. This reality has elevated cyber resilience from an IT concern to a core surveillance requirement. Modern e-surveillance must be secure by design, resilient by architecture, and governed by policy. The goal is no longer only to watch for threats; it is to withstand attacks, continue operating, and recover quickly when adversaries target the surveillance stack itself. Why E-Surveillance Systems Are Prime Cyber Targets The attack surface of surveillance has expanded rapidly. High-resolution IP cameras, network video recorders (NVRs), AI analytics services, and remote monitoring tools are often deployed at scale across sites. Many operate continuously, expose management interfaces, and store sensitive data. This combination makes them attractive to attackers seeking entry points, data exfiltration, or disruption. Industry analyses show that poorly secured cameras and recorders are frequently exploited through weak credentials, outdated firmware, or misconfigured networks. Once compromised, attackers can disable monitoring, manipulate footage, pivot into adjacent networks, or use devices as part of botnets. For organizations relying on surveillance for safety and compliance, such breaches undermine trust and operational continuity. Cyber resilience addresses this risk by assuming that attacks will happen and designing systems to limit blast radius, maintain visibility, and recover fast. 
What Cyber-Resilient E-Surveillance Really Means Cyber-resilient surveillance goes beyond perimeter defenses. It blends security controls, resilient architecture, and operational discipline into a cohesive approach. The objective is to protect confidentiality, integrity, and availability without sacrificing performance or scalability. At a practical level, this includes hardened devices, encrypted communications, strong identity and access management, network segmentation, continuous monitoring, and secure update mechanisms. Equally important are governance practices: defined data retention, audit trails, incident response playbooks, and regular testing. Research from standards bodies and industry consortia consistently emphasizes that resilience is a lifecycle commitment spanning design, deployment, operations, and response. Device Hardening: The First Line of Defense Cameras and edge devices are the foundation of surveillance and a common point of failure. Cyber-resilient deployments start with secure hardware and firmware. This includes secure boot, signed firmware updates, disabled default credentials, and tamper detection. Hardening also means minimizing exposed services and enforcing least-privilege access. Devices should communicate only with authorized systems using encrypted protocols. When vulnerabilities are discovered, rapid and authenticated patching is essential to prevent exploitation at scale. Independent security advisories repeatedly highlight that many breaches originate at the device layer. Strengthening this foundation dramatically reduces risk across the entire surveillance ecosystem. Network Segmentation and Zero-Trust Principles Modern surveillance networks should not be flat. Cyber resilience depends on segmentation: isolating cameras, recorders, analytics engines, and management interfaces from business IT and each other where appropriate. This limits lateral movement if one component is compromised. 
Zero-trust principles further strengthen defenses by requiring verification for every access request, regardless of location. Authentication, authorization, and continuous validation replace implicit trust. In practice, this means role-based access, multi-factor authentication for administrators, and strict API controls for integrations. Guidance from national cybersecurity agencies consistently recommends segmentation and zero-trust models for operational technology and IoT environments, including surveillance. Protecting Data: Encryption, Integrity, and Governance Surveillance systems generate sensitive data: video footage, metadata, analytics results, and operational logs. Cyber-resilient architectures protect this data in transit and at rest using strong encryption. Integrity checks ensure footage cannot be altered without detection, preserving evidentiary value and auditability. Governance matters as much as cryptography. Clear policies define who can access data, for what purpose, and for how long. Retention schedules reduce exposure by deleting data when it is no longer required. Comprehensive logging and audit trails support investigations and compliance. Privacy regulations and sector-specific standards increasingly expect these controls as a baseline, not an optional add-on. Continuous Monitoring and Incident Readiness Resilience is proven under pressure. Cyber-resilient e-surveillance systems include continuous monitoring for anomalies: unusual access attempts, configuration changes, traffic spikes, or device failures. These signals feed alerts and automated responses that contain threats early. Equally critical is preparedness. Incident response plans define how teams isolate affected components, preserve evidence, restore services, and communicate with stakeholders. Regular drills and tabletop exercises ensure readiness when incidents occur. 
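One way to picture segmentation is as a default-deny allow-list of flows between network segments. The sketch below is a toy model under stated assumptions: the segment names and the permitted ports are hypothetical (554 is the standard RTSP port and 443 is HTTPS, but whether a given deployment uses them is an assumption), and real enforcement would live in firewalls or network policy, not application code.

```python
# Hypothetical allow-list: (source segment, destination segment) -> permitted TCP ports.
# Anything not listed is denied by default.
ALLOWED_FLOWS = {
    ("cameras", "recorders"): {554},     # video streaming over RTSP (assumed)
    ("recorders", "analytics"): {443},   # footage pulled over HTTPS (assumed)
    ("management", "cameras"): {443},    # admin access to device web interfaces (assumed)
}


def is_allowed(src_segment: str, dst_segment: str, port: int) -> bool:
    """Default-deny check: a flow is permitted only if explicitly listed."""
    return port in ALLOWED_FLOWS.get((src_segment, dst_segment), set())


if __name__ == "__main__":
    print(is_allowed("cameras", "recorders", 554))
    print(is_allowed("cameras", "business_it", 445))
```

Because the lookup falls back to an empty set, a compromised camera has no listed path into business IT, which is the lateral-movement limit the text describes.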
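The integrity checks mentioned above can be illustrated with a keyed hash chain over recorded segments, so that altering, removing, or reordering any segment changes every later digest. This is a minimal sketch using Python's standard `hmac` and `hashlib` modules; the function names, the all-zero starting value, and the idea of chaining per segment are illustrative assumptions rather than a description of how any particular recorder works.

```python
import hashlib
import hmac


def chain_digests(segments, key: bytes):
    """Compute an HMAC-SHA256 chain: each digest covers the previous
    digest plus the current segment's bytes."""
    prev = b"\x00" * 32  # fixed starting value for the chain (assumed)
    digests = []
    for seg in segments:
        prev = hmac.new(key, prev + seg, hashlib.sha256).digest()
        digests.append(prev)
    return digests


def verify(segments, digests, key: bytes) -> bool:
    """Recompute the chain and compare in constant time per digest."""
    expected = chain_digests(segments, key)
    return len(expected) == len(digests) and all(
        hmac.compare_digest(e, d) for e, d in zip(expected, digests)
    )


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    footage = [b"segment-1", b"segment-2", b"segment-3"]
    stored = chain_digests(footage, key)
    print(verify(footage, stored, key))
    print(verify([b"segment-1", b"edited", b"segment-3"], stored, key))
```

The key would need to be held outside the recorder's reach for this to be meaningful; otherwise an attacker who alters footage could simply recompute the chain.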
Industry studies show that organizations with practiced response plans restore operations faster and incur lower breach costs than those reacting ad hoc. Cloud, Edge, and Hybrid: Designing for Resilience Surveillance increasingly spans edge devices, on-prem systems, and cloud services. Cyber resilience requires thoughtful distribution of workloads and controls across this hybrid landscape. Edge analytics reduce data exposure and latency by processing locally, while centralized platforms provide oversight, correlation, and governance. Redundancy and failover ensure that loss of a single component does not blind the system. Secure APIs enable integration without expanding the attack surface. Architectures that balance edge autonomy with centralized control are better positioned to absorb shocks and maintain service. The Role of IVIS in Cyber-Resilient E-surveillance As surveillance environments grow more connected, organizations need platforms that embed security and resilience into everyday operations. This is where IVIS plays a meaningful role. IVIS is designed to unify surveillance across devices, sites, and environments while applying policy-driven security controls throughout the stack. By supporting encrypted communications, role-based access, and centralized monitoring, IVIS helps organizations maintain visibility without compromising protection. Its hybrid architecture enables resilient deployments across edge, on-prem, and cloud. Importantly, IVIS aligns technical safeguards with governance. Audit trails, configurable retention, and controlled integrations help organizations meet regulatory expectations and respond confidently to incidents. In practice, IVIS supports a shift from reactive defense to operational cyber resilience, securing the security infrastructure itself. Conclusion E-surveillance systems exist to protect, but they must also be protected. 
Cyber-resilient surveillance acknowledges the reality of modern threats and responds with layered defenses, resilient architectures, and disciplined operations. By hardening devices, segmenting networks, protecting data, and preparing for incidents, organizations can ensure their surveillance infrastructure remains trustworthy and effective. Platforms like IVIS demonstrate how resilience can be built into surveillance from the ground up, securing not just assets and people, but the very systems entrusted with their protection.

Hotels That Think Ahead: How Predictive E-Surveillance Is Shaping Guest Experience?

A guest walks into a hotel lobby after a long journey. The space feels calm, organized, and welcoming. There are no visible signs of tension, no delays at the front desk, no congestion near elevators. Behind this smooth experience is something guests rarely notice but deeply appreciate: a hotel that anticipates rather than reacts.

Hospitality has always been about foresight: knowing what guests need before they ask. Today, that philosophy extends into security and operations through predictive e-surveillance. Instead of merely recording events or responding after incidents occur, modern surveillance systems help hotels anticipate risks, manage crowd flow, and support service delivery in real time. In an industry where experience defines reputation, predictive surveillance is becoming a quiet differentiator.

Why Hotels Need Predictive, Not Reactive, Surveillance

Hotels operate in a uniquely open environment. Lobbies are public-facing. Guests, visitors, vendors, and staff move constantly through shared spaces. Events, peak seasons, and late-night activity add layers of complexity. Traditional surveillance systems, designed primarily for post-incident review, struggle to keep up with this dynamic flow.

According to the World Tourism Organization, guest perceptions of safety significantly influence satisfaction and return intent. At the same time, the hospitality industry faces ongoing challenges such as theft, unauthorized access, crowd-related incidents, and operational disruptions.

Reactive security addresses problems after they surface. Predictive e-surveillance, by contrast, focuses on early indicators: patterns and anomalies that suggest something may go wrong if left unattended. This shift aligns security with hospitality’s core promise: seamless care.
What Predictive E-Surveillance Looks Like in Hotels

Predictive e-surveillance combines AI-powered video analytics, behavioral modeling, and historical data to forecast risk. These systems continuously learn what “normal” looks like in a hotel environment (daily guest movement, staff routines, peak traffic times) and flag deviations that may require attention.

For example, predictive analytics can identify unusual loitering in corridors late at night, anticipate congestion in lobbies during check-in surges, or detect abnormal movement near restricted back-of-house areas. Instead of triggering alarms for every movement, the system prioritizes alerts based on context and likelihood of impact.

Research published in IEEE Access shows that predictive video analytics significantly improve detection accuracy while reducing false alarms by focusing on behavior patterns rather than isolated events. For hotels, this means fewer disruptions and more meaningful interventions.

Enhancing Guest Experience Through Anticipation

The most powerful aspect of predictive surveillance is its impact on guest experience. When risks are anticipated, staff can act discreetly and proactively.

For instance, if analytics predict crowd build-up near elevators after a conference session ends, hotel teams can redirect flow or deploy additional staff. If the system detects unusual activity near guest rooms, security can intervene quietly before guests are disturbed. Even safety-related incidents, such as slips or medical emergencies, can be identified faster through behavior and posture analysis.

Cornell University’s School of Hotel Administration notes that guests value safety most when it is invisible yet effective. Predictive surveillance supports this by preventing incidents rather than responding visibly after they occur.

Supporting Hotel Staff and Operations

Hotels rely on coordination across multiple teams: front desk, housekeeping, security, facilities, and food & beverage.
Predictive e-surveillance supports these teams with operational intelligence. By analyzing movement patterns and space utilization, systems can highlight understaffed areas, delayed room servicing, or congestion in service corridors. This enables managers to make informed, real-time decisions that improve efficiency and reduce stress on staff.

McKinsey research on hospitality operations emphasizes that data-driven decision-making leads to higher service consistency and lower operational costs. Surveillance analytics add a visual and behavioral dimension to this intelligence, helping hotels align security with service excellence.

Loss Prevention Without Guest Discomfort

Loss prevention remains an important concern for hotels, especially in high-traffic properties. Predictive surveillance strengthens asset protection without resorting to intrusive monitoring.

AI models can identify suspicious behavior patterns, such as repeated access attempts to restricted areas or unusual movement near storage zones, before losses occur. Alerts allow staff to intervene early and discreetly, reducing confrontation and maintaining a welcoming atmosphere.

Deloitte’s hospitality risk studies highlight that proactive monitoring significantly lowers shrinkage and internal losses. When combined with predictive analytics, surveillance becomes a preventive tool rather than a visible deterrent.

Privacy, Ethics, and Trust in Hospitality Surveillance

Hotels are built on trust. Guests expect privacy in personal spaces and respect in shared ones. Predictive e-surveillance must therefore operate within strict ethical boundaries.

Modern systems emphasize behavior-based analytics over identity tracking. Private areas such as guest rooms and restrooms are excluded entirely. Data access is restricted, retention periods are defined, and usage is governed by policy.
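A defined retention period only reduces exposure if something actually enforces it. The sketch below shows one way such a rule could be expressed. The category names, the day counts, and the `legal_hold` flag (for footage preserved during an investigation) are all hypothetical values chosen for illustration, not recommendations or a description of any specific system.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category, in days.
RETENTION_DAYS = {"video": 30, "analytics": 90, "audit_log": 365}


def is_expired(record, now=None, retention_days=RETENTION_DAYS):
    """True when a record has outlived its category's retention period."""
    now = now or datetime.now(timezone.utc)
    if record.get("legal_hold"):
        return False  # assumed rule: held records are never purged automatically
    days = retention_days.get(record["category"])
    if days is None:
        return False  # unknown categories are kept for manual review
    return now - record["created_at"] > timedelta(days=days)


def split_by_retention(records, now=None):
    """Partition records into (keep, purge) lists without deleting anything."""
    now = now or datetime.now(timezone.utc)
    keep, purge = [], []
    for record in records:
        (purge if is_expired(record, now) else keep).append(record)
    return keep, purge
```

Returning the two lists rather than deleting in place keeps the decision auditable: the purge list can be logged before any data is removed.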
Frameworks such as GDPR and UNESCO’s Recommendation on the Ethics of Artificial Intelligence stress proportionality, transparency, and accountability. Clear communication through signage and privacy policies helps guests understand that surveillance exists to protect, not intrude. Ethical deployment ensures that predictive intelligence enhances hospitality rather than undermining it.

The Role of IVIS in Predictive Hotel Surveillance

As hotels adopt predictive surveillance, they need platforms capable of unifying intelligence across spaces while maintaining governance and scalability. This is where IVIS plays a meaningful role.

IVIS enables hotels to centralize surveillance across lobbies, corridors, parking areas, and back-of-house zones into a single intelligent operational view. By combining real-time video analytics with historical pattern analysis, IVIS helps hotels anticipate risks and manage guest flow proactively.

Its hybrid architecture, spanning edge, on-prem, and cloud, ensures low-latency analytics on-site while supporting centralized oversight across multiple properties. Policy-driven controls and secure data handling align predictive capabilities with privacy and regulatory requirements.

In hospitality environments, IVIS supports a shift from reactive security to anticipatory service, allowing hotels to think ahead without disrupting the guest experience.

The Future of Predictive Surveillance in Hospitality

Predictive e-surveillance will continue to evolve alongside smart hospitality technologies. Integration with property management systems, event schedules, and occupancy data will enable even more precise forecasting. Edge computing will allow instant local responses, while AI models will refine predictions over time.

As hotels compete on experience rather than amenities alone, those that adopt predictive intelligence will stand out. The future of hotel security is not about control; it is about care delivered at the right moment.
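To make the idea of learning what “normal” looks like more concrete, here is a deliberately simple sketch: it builds a per-hour baseline from historical people counts for one zone and scores a new reading by how far it sits above that baseline. Real systems use far richer behavioral models. The function names, the z-score approach, the threshold of 3.0, and the `min_std` floor are assumptions made for illustration.

```python
from statistics import mean, pstdev


def build_baseline(history):
    """history: iterable of (hour, count) pairs -> {hour: (mean, std)}."""
    by_hour = {}
    for hour, count in history:
        by_hour.setdefault(hour, []).append(count)
    return {h: (mean(c), pstdev(c)) for h, c in by_hour.items()}


def deviation_score(baseline, hour, count, min_std=1.0):
    """Z-score of count against that hour's baseline; None if the hour is unseen."""
    if hour not in baseline:
        return None
    mu, sigma = baseline[hour]
    # Floor the spread so very quiet hours do not produce extreme scores.
    return (count - mu) / max(sigma, min_std)


def should_alert(score, threshold=3.0):
    """One-sided check: only unusually high counts raise an alert."""
    return score is not None and score >= threshold
```

With this framing, a dozen people in the lobby at 6 p.m. scores near zero, while the same count in a corridor at 3 a.m. scores high. That is the context-dependent prioritization the article describes, reduced to its simplest form.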
Conclusion

Hotels that think ahead create experiences guests remember for the right reasons. Predictive e-surveillance enables this foresight by turning security into a silent partner in service delivery. By anticipating risks, managing flow, supporting staff, and respecting privacy, predictive surveillance aligns perfectly with hospitality’s core values. Platforms like IVIS demonstrate how hotels can adopt this